The other day I posted an article discussing my issue tracking down a bug in a

ClickOnce application. I had noted that once I made a change to

maxRequestLength in my web.config file the issue went away. Well, that

change was not the real solution.

While the maxRequestLength does help the application pull down the query at

times, it was not what corrected the original problem! This morning as I was

continuing to work on the application, I had gotten the same 504 error (which by

the way you can read the original article

here)

I thought I had corrected. Since IIS was not sending me any vital information

other than the 504 timeout I had to find another way to catch the bug.

I had to add another entry into my web.config file to catch any possible

errors my services were throwing out that were not being logged in IIS or

Fiddler. Sure enough, I was able to log a trace that showed me the root of the

504 problem. Before I tell you what the problem was with my program, I would

like to take you through build a tracer similar to the one I used.

Let’s start building our tracer. First we drop into a system.diagnostics

block. The system.diagnostics block is in charge of catching various trace

messages and outputting them somewhere of our choosing, so it will contain child

tags that will control how it operates. For reference, you can read all about

the system.diagnostics namespace on

MSDN.

<system.diagnostics>

<!--

(Here be dragons)

-->

</system.diagnostics>

Now we will add another line to our XML snippet. The trace tag, with the

property of autoflush=”true” is a class in charge of tracing through methods

in your code.

Autoflush

will define if flush is called after every write (if it is set to true; see the

link for the full MSDN specifications). So now our XML looks as so:

<system.diagnostics>

<trace autoflush="true" />

<!--

(Here be dragons)

-->

</system.diagnostics>

So now we step down into our sources, and define a source for diagnostics. In

this case our source will have a name of “System.ServiceModel”. This is defined

on MSDN as:

System.ServiceModel: Logs all stages of WCF processing, whenever configuration

is read, a message is processed in transport, security processing, a message

is dispatched in user code, and so on.

Source: https://msdn.microsoft.com/en-us/library/ms733025.aspx

We then define our switchValue, which will capture any messages matching the

values. Possible values are:

- Off

- Critical

- Error

- Warning

- Information

- Verbose

- ActivityTracing

- All

- …more details on each level can be found on

MSDN

In this case we want to catch message that are “Information” or

“ActivityTracing” operations. The propagateActivity setting determines

whether the activity should be followed to other endpoints that take part in the

exchange. By setting this to true, you can take trace files generated by any two

endpoints and see how a set of traces on one end flowed to a set of traces on

another end. Our XML now looks like this:

<system.diagnostics>

<trace autoflush="true" />

<sources>

<source name="System.ServiceModel" switchValue="Information, ActivityTracing" propagateActivity="true">

<!--

(Here be dragons)

-->

</source>

</sources>

</system.diagnostics>

We are almost done defining the XML for our trace operations. Now we just need

to add the actual listener that will catch anything that is sent from the source

we defined. We will add an XmlWriterTraceListener to save the information back

to something. We will be naming it “std”, defining the listener as

System.Diagnostics.XmlWriterTraceListener, and we instruct it to save a file

to the website root as “Server.e2e”. Our XML for tracing is now complete!

<system.diagnostics>

<trace autoflush="true" />

<sources>

<source name="System.ServiceModel" switchValue="Information, ActivityTracing" propagateActivity="true">

<listeners>

<add name="sdt" type="System.Diagnostics.XmlWriterTraceListener" initializeData="Server.e2e"/>

</listeners>

</source>

</sources>

</system.diagnostics>

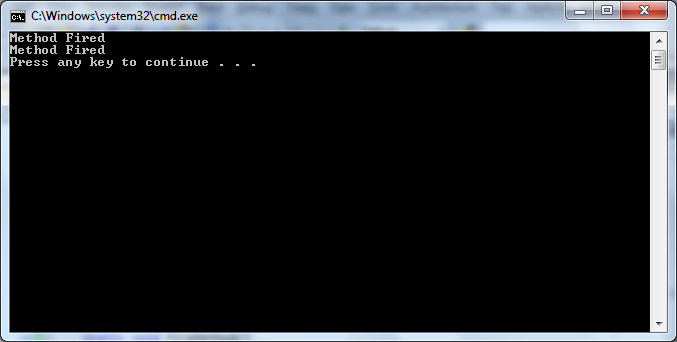

We then go back to the application (after recycling the App Pool in IIS that

hosts the application at fault), launch the ClickOnce program, and trigger the

error to occur. When the error finally does occur all you have to do is pull

down the file the listener wrote to (in our case Server.e2e) and open it up with

the Microsoft Service Trace Viewer which is located in

All Programs -> Visual Studio 2010 -> Microsoft Windows SDK Tools. You

should see any errors that are occurring, and hopefully the one that you need to

know to correct the problem!

After I had my output opened in the Service Trace Viewer I found the original

problem. In my case the problem was listed as:

EXCEPTION TYPE:

System.Data.OracleClient.OracleException, System.Data.OracleClient,

Version=4.0.0.0, Culture=neutral, PublicKeyToken=xxxxx

MESSAGE:

ORA-12154: TNS:could not resolve the connect identifier specified

My application was dying off because IIS was not able to return a result to it.

The reason IIS was unable to return an invalid result was caused by an Oracle

database not returning any data. In our QA environment at Mercer, we have a

clustered Oracle database configuration. When IIS made a query to the Oracle

cluster, it had a 50/50 chance of hitting a server that did not want to respond.

This is why the application worked some of the time and then would crash other

times.

The quick fix? Tell the application to only hit the good Oracle server.

The long-term, correct fix to make? Obviously get the Oracle cluster in QA

corrected. Then rebuild the service in IIS to understand this type of error, and

relay back a better message to the client. The client ClickOnce application will

then need to understand this new message, and throw up an error message instead

of locking up.

Hopefully this article is of use to understanding how to trace events in your

applications and/or provide another viewpoint to use when you have an

intermittent issue like I ran into.